Highlights:

- With new AI-optimized features in Arista EOS, the Arista Etherlink AI portfolio can support AI cluster sizes ranging from thousands to hundreds of thousands of XPUs.

- Every Arista Etherlink switch is designed to be compatible with the upcoming Ultra Ethernet Consortium standards.

This week marks the 10th anniversary of Arista Networks Inc. becoming a publicly traded company. To celebrate, the company hosted a special event at the New York Stock Exchange, where ANET stock trades and which was one of Arista’s early customers.

The celebration at the NYSE wasn’t the only way the company marked the IPO anniversary; Arista also announced some artificial intelligence-related news.

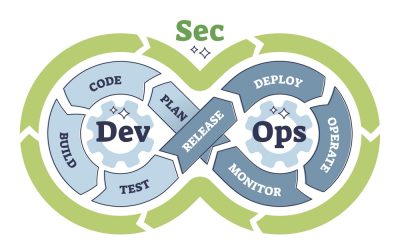

Arista recently launched its Etherlink AI platforms, designed to boost network performance for AI workloads such as training and inference. Featuring new AI-optimized capabilities in Arista EOS, the Arista Etherlink AI portfolio can support AI cluster sizes ranging from thousands to hundreds of thousands of XPUs. Using efficient one- and two-tier network topologies, it delivers better application performance than complex multi-tier networks and offers advanced monitoring capabilities, including flow-level visibility.

Many vendors misuse the term “non-blocking.” It refers to a switch in which all ports can operate at full capacity simultaneously. Switch designers typically assume this level of performance won’t be necessary, and that assumption is often accurate. As a result, vendors build switches that partially block traffic and then retransmit it to relieve congestion.

Calling a switch “blocking” would hurt marketing, so vendors use terms like “near non-blocking,” which can be misleading. For AI workloads, any degree of blocking can cause performance problems, making it crucial for network engineers to invest in fully non-blocking products.
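One common way to quantify how far a design sits from non-blocking is its oversubscription ratio: total host-facing bandwidth divided by total uplink bandwidth. The Python sketch below is illustrative only; the function names and the example port counts are assumptions chosen for the arithmetic, not figures from Arista or any other vendor.

```python
from fractions import Fraction


def oversubscription_ratio(downlink_ports, downlink_gbps, uplink_ports, uplink_gbps):
    """Return host-facing bandwidth divided by uplink bandwidth as an exact fraction."""
    downlink_total = downlink_ports * downlink_gbps
    uplink_total = uplink_ports * uplink_gbps
    if uplink_total <= 0:
        raise ValueError("uplink bandwidth must be positive")
    return Fraction(downlink_total, uplink_total)


def is_non_blocking(ratio):
    """A ratio of 1:1 or lower means the uplinks can carry every downlink at full rate."""
    return ratio <= 1


# Hypothetical leaf with 48 x 100G host ports and 8 x 400G uplinks.
oversubscribed = oversubscription_ratio(48, 100, 8, 400)

# Hypothetical leaf that splits 128 x 400G ports evenly between hosts and uplinks.
balanced = oversubscription_ratio(64, 400, 64, 400)

print(oversubscribed, is_non_blocking(oversubscribed))
print(balanced, is_non_blocking(balanced))
```

A ratio above 1 means that, with every host port transmitting at line rate toward the uplinks, some traffic has to wait or be dropped. That is the situation the “near non-blocking” label tends to obscure.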

The Enhancements to Arista’s Etherlink AI Platforms

Martin Hull, vice president and general manager of Cloud and AI Platforms at Arista, shared the specifics of Arista’s Etherlink platforms, which are designed to address the distinctive requirements of AI networking. The portfolio comprises the 7060X6 AI Leaf, the 7800R4 AI Spine and the 7700R4 AI Distributed Etherlink Switch.

- The 7060X6 AI Leaf switch family, available now, uses Broadcom Tomahawk 5 silicon and provides 51.2 terabits per second of capacity, supporting either 64 800G or 128 400G Ethernet ports.

- The 7800R4 AI Spine, currently in customer testing and slated for release in the second half of the year, is the fourth generation of Arista’s 7800 modular systems. It incorporates Broadcom Jericho3-AI processors with an AI-optimized packet pipeline and provides non-blocking throughput using a virtual output queuing architecture. It can support up to 460 terabits per second in a single chassis, equating to 576 800G or 1,152 400G Ethernet ports.

- The 7700R4 AI Distributed Etherlink Switch, currently in customer testing and scheduled for release in the second half of the year, targets the largest AI clusters. It uses massively parallel distributed scheduling and congestion-free traffic spraying based on the Jericho3-AI architecture. As the first in a new line of ultra-scalable, intelligent distributed systems, the 7700R4 is designed to deliver the highest sustained throughput for extremely large AI clusters.
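The port counts and capacities quoted above can be cross-checked with simple arithmetic. A minimal Python sketch follows; the helper name `aggregate_tbps` and the dictionary layout are hypothetical, and the calculation simply counts each port once at its nominal line rate.

```python
def aggregate_tbps(port_count, port_speed_gbps):
    """Sum of nominal port line rates, converted from Gbps to Tbps."""
    return port_count * port_speed_gbps / 1000


# Port configurations as quoted in the article: (port count, speed in Gbps).
configurations = {
    "7060X6 AI Leaf, 800G": (64, 800),
    "7060X6 AI Leaf, 400G": (128, 400),
    "7800R4 AI Spine, 800G": (576, 800),
    "7800R4 AI Spine, 400G": (1152, 400),
}

for name, (ports, speed) in configurations.items():
    print(f"{name}: {ports} ports -> {aggregate_tbps(ports, speed)} Tbps")
```

Both 7060X6 configurations work out to 51.2 Tbps, and both 7800R4 configurations to 460.8 Tbps, in line with the "up to 460" figure quoted for the chassis.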

The company stated that a single-tier network topology built on Etherlink platforms can accommodate more than 10,000 XPUs, while a two-tier Etherlink network supports more than 100,000 XPUs. Arista emphasized that reducing the number of network tiers improves AI application performance, requires fewer optical transceivers, lowers cost and boosts reliability. Given concerns about the power consumption of AI infrastructure, this approach can partially mitigate the increase.

All Arista Etherlink switches are designed to be compatible with the upcoming Ultra Ethernet Consortium standards. This compatibility is expected to yield additional performance advantages once UEC NICs are released.

According to the company, Arista’s EOS and CloudVision suites also play vital roles in the new AI-centric networking platforms. These suites include features for networking, security, segmentation, visibility and telemetry, providing robust and dependable support for high-value AI clusters and workloads.

Closing Considerations

Arista is investing heavily in its move into AI and has been forging closer ties with Nvidia Corp. The results are evident: the company is demonstrating the importance of Ethernet despite claims that InfiniBand is the better choice for AI. It’s apt that Arista chose the NYSE as the venue for the event because, among all network vendors, Arista has excelled at aligning its product vision and roadmap with the evolution of AI.